Advanced Features¶

OrderFlow has many other features that have not yet been covered in this document. This section aims to introduce a few of them, guided by their position in the desktop user interface.

Import¶

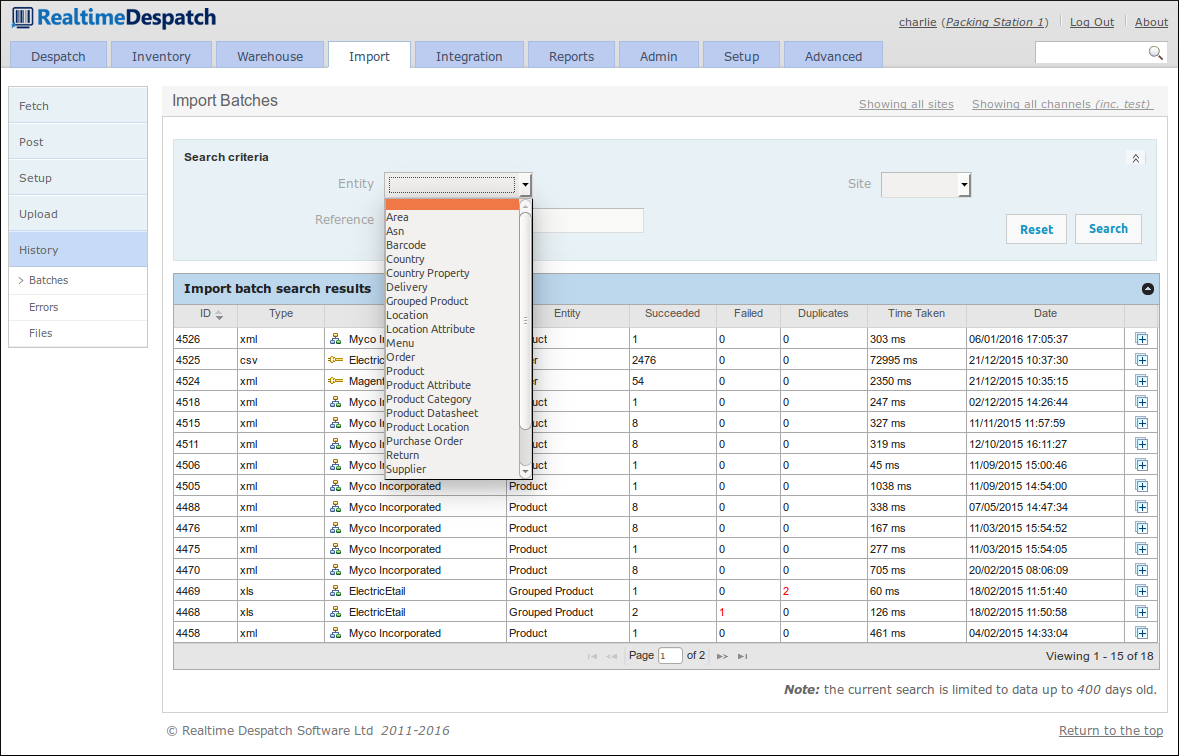

As we have already seen, OrderFlow has the potential to require a large amount of configuration data in order to support the more complex warehouse layouts and processes. Keying-in this configuration data would be time-consuming and prone to error, so it is made possible for such setup / configuration data to be imported from spreadsheets etc.

In addition to configuration data, transactional data such as orders needs to be imported, again to avoid having to key-in such data. OrderFlow supports many different data import mechanisms and formats, with the aim of being flexible enough to integrate with other systems with the minimum of effort.

For example, it is possible to import orders from comma-separated value (CSV) files, spreadsheets, or richer data formats such as XML or JSON. Imported data can be automatically transformed into more-expected formats so that OrderFlow can process it more easily. OrderFlow can be pushed orders from a client that uses OrderFlow’s application programming interface (API), or that places files on its FTP server, for example. Alternatively, OrderFlow can be configured to pull orders from a remote system (e.g. a shopping cart).

The history of all import attempts, both successful and failing, are recorded in OrderFlow’s import history, so that it is always possible to view how data arrived on the system. The screenshot below gives an example of such a history.

Integration¶

The Integration area of OrderFlow primarily deals with communications with external systems, both at the message level and at the application programming interface (API) level. It also holds the configuration for some of OrderFlow’s internal processes, allowing them to be changed without the need for a new version of the codebase.

Remote Messages¶

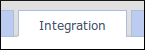

The Remote Messages area of OrderFlow deals with communications with external systems. It shows all messages sent to external systems, and their corresponding responses.

Each remote message is of a certain message type, which is a configurable object that provides flexibility to have fine-grained control over how these messages are sent, and what the responses mean.

For example, an ‘inventory notification’ message type can be configured to be sent over HTTP, with a maximum of 5 retries, and that a successful message is one with HTTP response codes 200 or 401. Additionally, the response body must not contain the text ‘<failures>’.

API Definitions¶

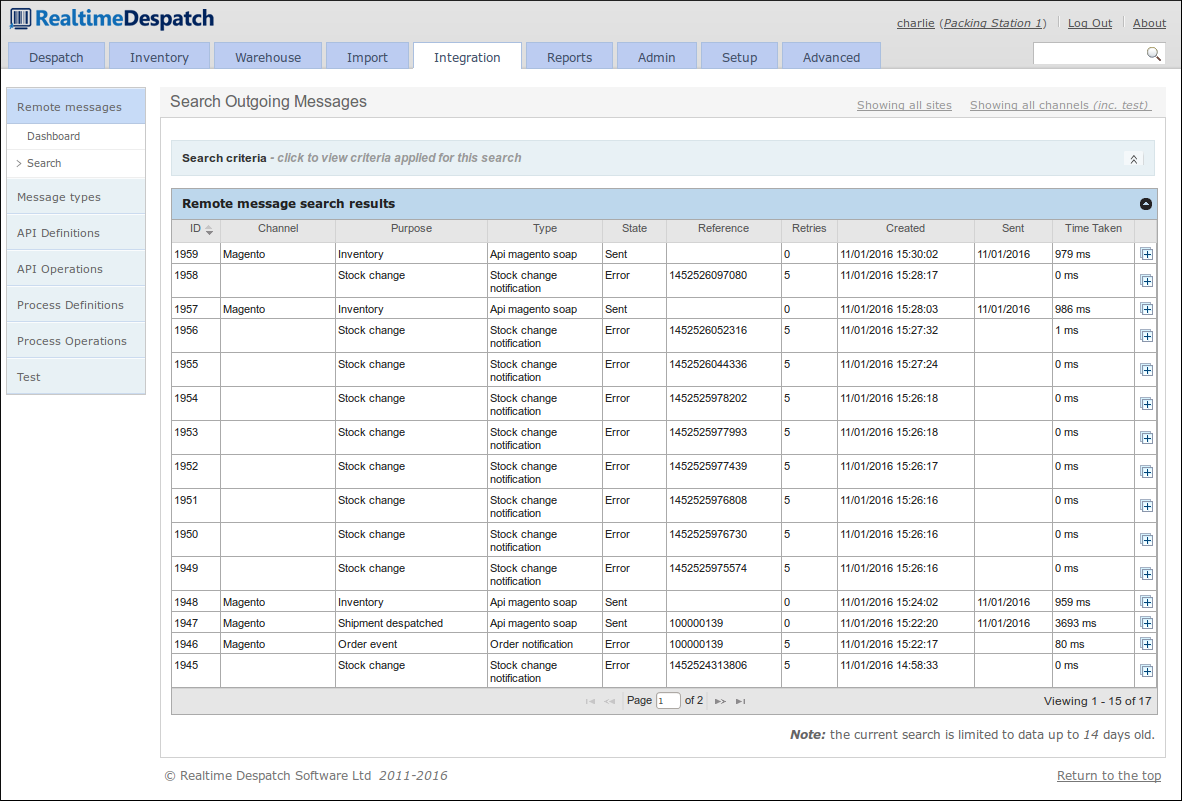

This area of OrderFlow allows an OrderFlow user to configure entire external APIs without code changes. This allows the specifics of external interfaces to be kept separate from OrderFlow’s internal processes and logic. For example, a process to fetch a product catalogue from a remote system may consist of one API request, or it may consist of several requests and responses. OrderFlow’s API configurations keep this detail separate from its core processing.

It also gives a high degree of power and flexibility to change how OrderFlow interacts with remote systems ‘on the fly’. An example of this would be if a particular API to ‘pull’ orders from a remote shopping cart system was configured, and the remote system changed its status codes, then those changes could be reflected in OrderFlow immediately.

Process Definitions¶

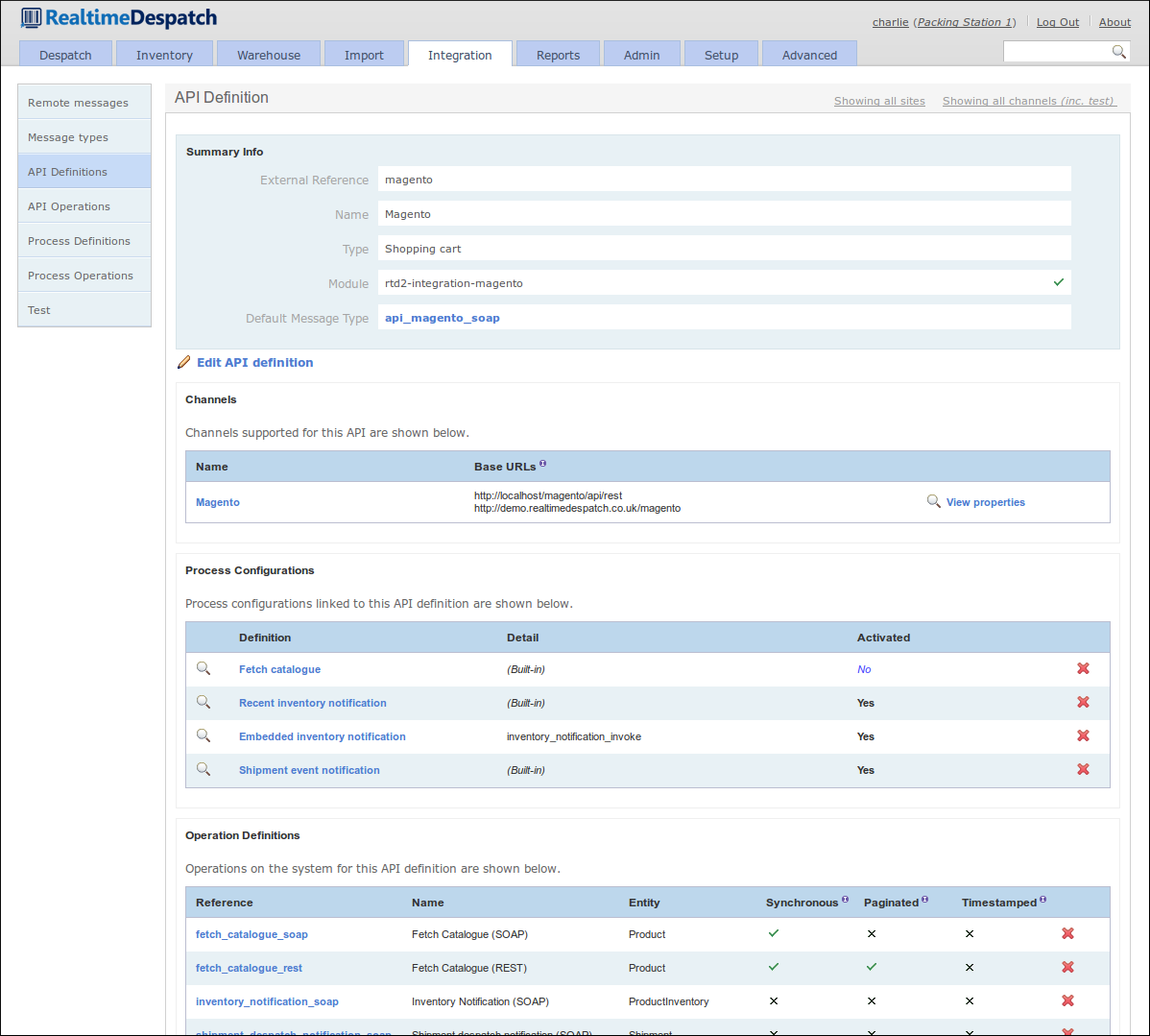

OrderFlow’s ‘Process Framework’ provides a powerful framework to control many of its internal processes, and to define and configure new ones.

The motivation behind this is to enable OrderFlow to be more dynamic in terms of being able to handle changing requirements without the need to develop, test, build and deploy new code. The framework allows logic to be encapsulated in scripts, reports, API operations and other constructs that control the process. Changing the processes has immediate effect.

For example, a process to re-fetch all failed orders might invoke a report to query which orders have failed to import successfully, then it might invoke an API operation to explicitly fetch details of those orders. Depending upon the scope of the process that is being run, a specific API will be invoked. This may be different for different scopes (e.g. sales channels).

Another example is a more simple ‘shipment despatched notification’ process. This would be configured to invoke an API operation to notify a remote system that a shipment has been despatched. The variation here would be in the API operation - one or more remote messages may end up being sent in this case.

Reports¶

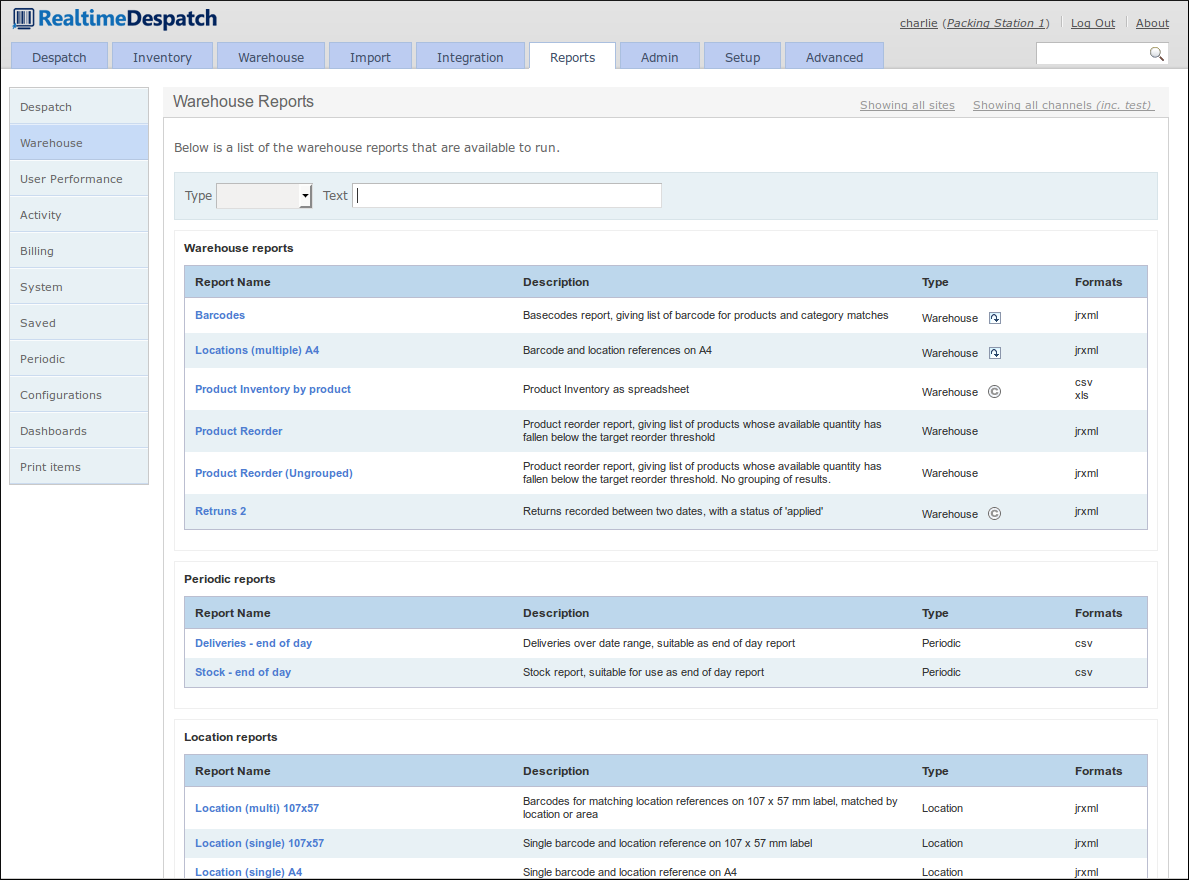

The Reports area of OrderFlow is where all the reports used by the system are configured. Some of them can also be run from the user interface, for display or download.

A ‘report’ is simply the extraction of data from OrderFlow’s underlying database, presented in a particular format. Example formats could be comma-separated values (CSV), Excel spreadsheet (XLS), Portable Document Format (PDF), text or Jasper Reports XML format, which allows for more presentable output, e.g. despatch notes. More details on writing reports are available in the OrderFlow Report Writer’s Guide.

Reports are used both externally, where the data is sent or downloaded to an external system, or internally to OrderFlow, for example to drive certain processes, or to present ‘dashboard fragments’ on the user interface.

The following screenshot shows some of the warehouse reports configured.

The Reports section also contains information on user activity and performance, plus billing configuration and metrics that third-party fulfilment houses can use to bill their clients.

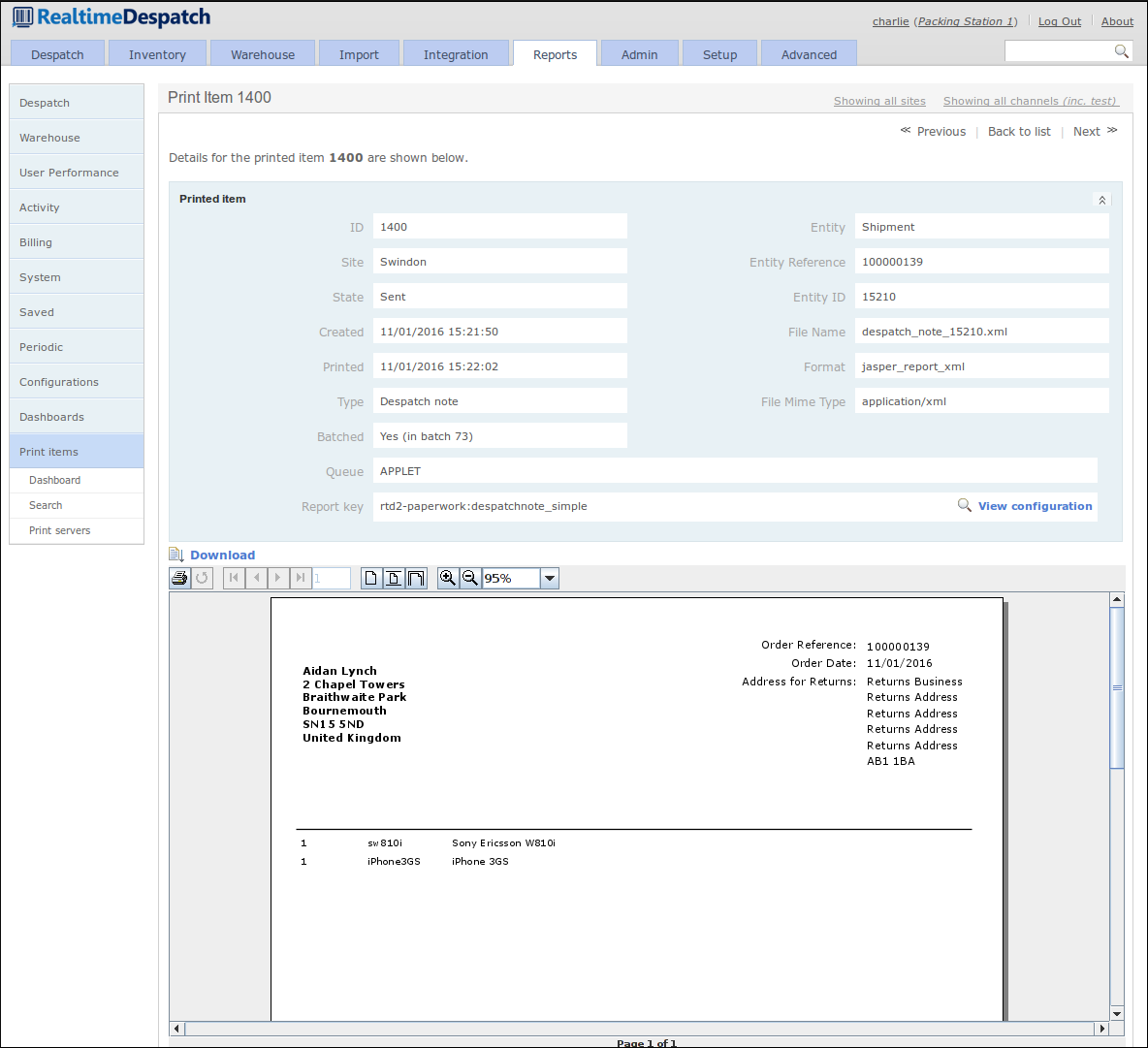

Finally, the Print Items sub-menu contains details of everything that OrderFlow has been requested to print, plus details of which print servers are connected. An example print item detail page is displayed below.

Admin¶

The Administration area of OrderFlow deals with the day-to-day running of the system, from the set-up of user permissions and role definitions, through logging and error management, to displaying which scheduled jobs are running and have run.

It is expected that the system manager would use this menu to keep abreast of any problems encountered in a running system.

This area also shows system performance information and exposes configuration of the archiving and housekeeping operations within OrderFlow. These functions ensure that the system does not have to handle an ever-increasing amount of data, which would itself cause performance problems.

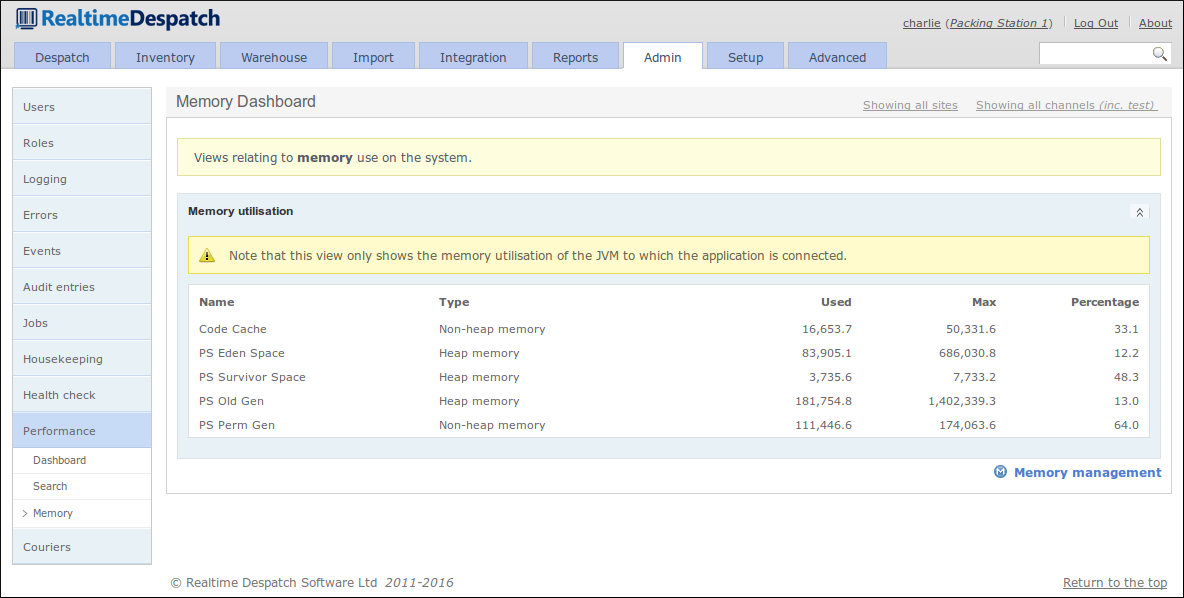

The following screenshot shows the Memory Dashboard, which details the memory utilisation of the OrderFlow instance’s Java Virtual Machine (JVM).

Setup¶

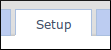

The Setup area of OrderFlow is where the bulk of the configuration relating to business processes is made. This configuration includes what organisations, channels and sites are defined and active in the OrderFlow instance. It also includes all the configurable properties in the system, which are instrumental in controlling how OrderFlow behaves.

There are also sub-menus to configure couriers, batch types, print queues, workstations and other entities. More details are given in the following sections.

Sites, Organisations and Channels¶

As specified in the Order Processing section, OrderFlow can handle orders from multiple organisations, and multiple sales channels within organisations. It is also a multi-site system, supporting fulfilment of orders across multiple warehouses.

Whereas the relationship between organisations and channels is an inclusive one, OrderFlow considers sites to be orthogonal to these, i.e. it is possible for a shipment for a particular channel to be fulfilled in any site.

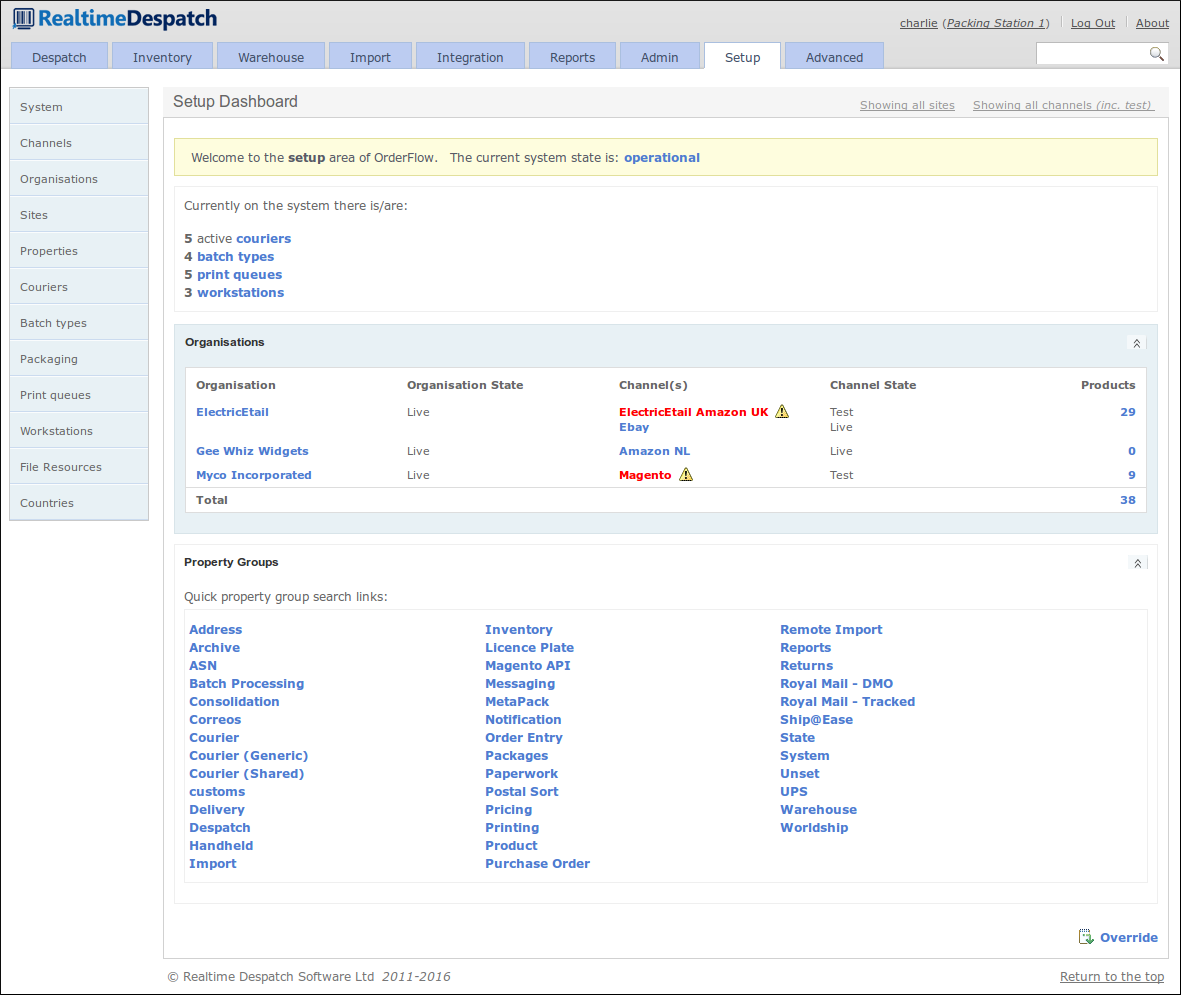

All these entities can be configured under the Channels, Organisations and Sites menus. The following screenshot shows an organisation detail page.

Application Properties¶

Application Properties within OrderFlow enable multi-layered configuration to be applied dynamically to a deployed instance. Typically, when the value of an application property is updated, that will have an immediate effect on the application, without needing to restart it.

Properties can control all sorts of aspects of OrderFlow, from despatch processing, through printing, to external system configuration and much more.

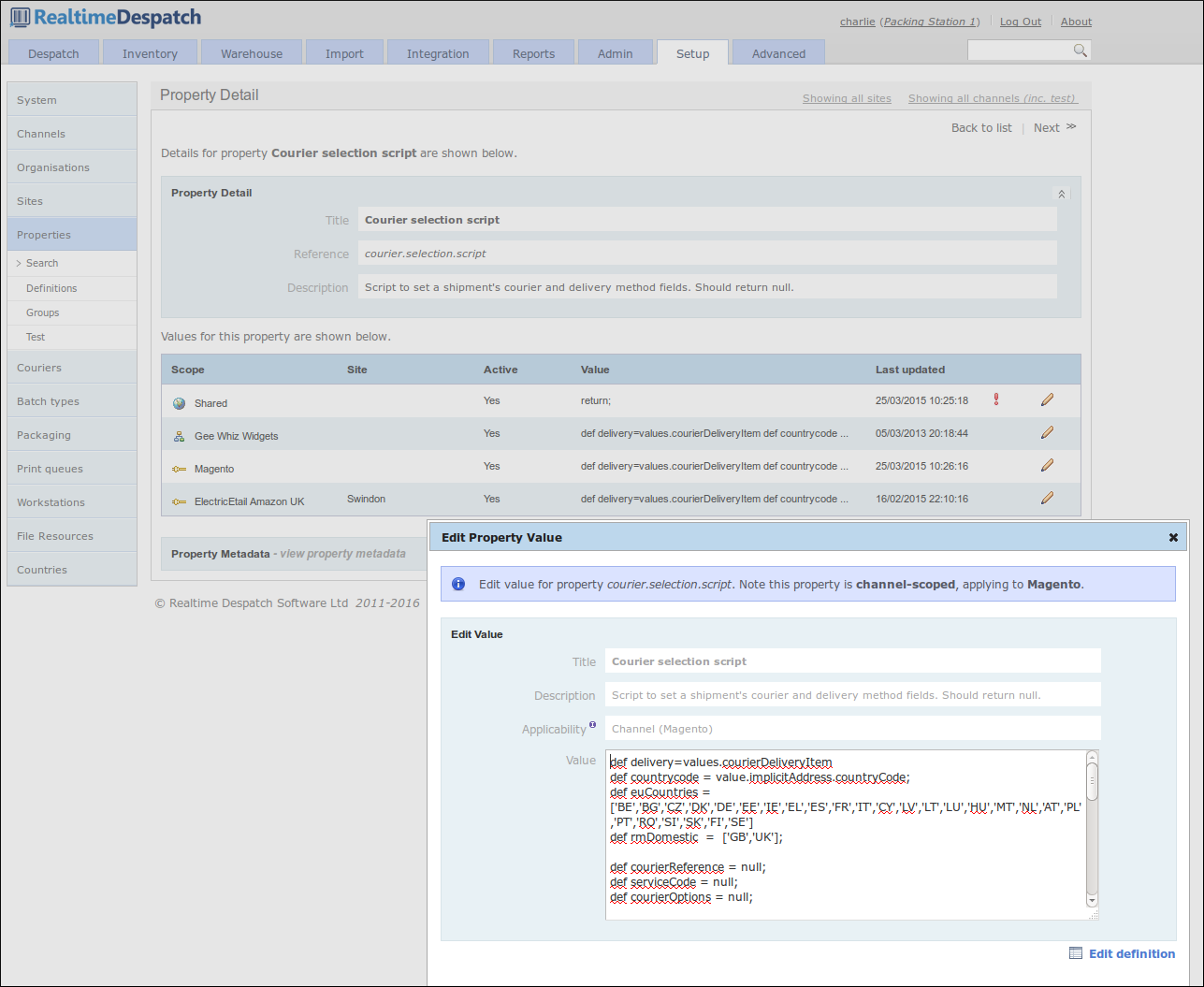

One very powerful aspect of application properties within OrderFlow is that they can be scoped and site-specific. This means that there can be several property values for a single property definition. The values would apply to different scopes (i.e. channels/organisations) or sites, thus allowing system behaviour to differ, depending upon the scope of the processing concerned, and to what site it is applicable.

For example, a shipment might have its courier assigned by a ‘courier selection script’ property. This property could have different values for different warehouses, because only certain couriers actually pick up from each warehouse. It may also differ based on the shipment’s organisation (i.e. the company selling the goods). This would usually be the case, as it is typical that it is organisations who set up the contracts with courier companies. Finally, the script may also differ at the channel level, because only certain channels may attract certain courier services or options (e.g. eBay orders may be prevented from being despatched on a next day service).

The following screenshot shows a scriptable property with different scopes.

Couriers¶

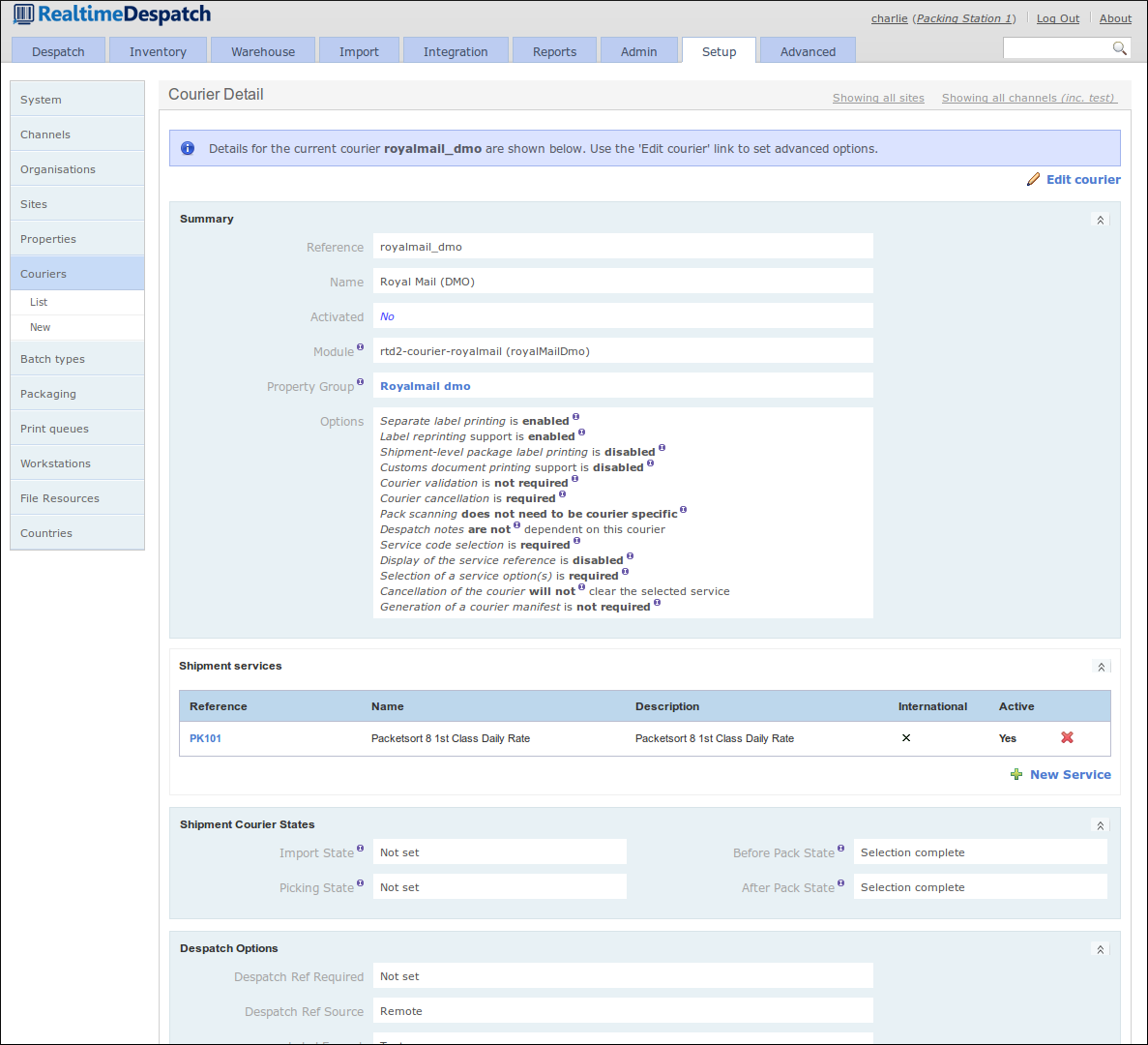

Couriers are integral to OrderFlow - shipments will not go anywhere unless despatched via a courier. Under the Couriers tab, OrderFlow exposes configuration that allows couriers of many different types to be set up.

The configuration required for couriers can range from simple to complex; from a simple ‘printed postage impression’ (PPI) courier, that just requires a label to be printed for a shipment, to a fully-integrated, real-time label-producing courier that has an up-to-date picture of the shipments it needs to collect at each scheduled pick-up.

Couriers can be configured to require despatch references, which can be managed by OrderFlow, or by the courier’s own external system. A courier’s external system can be remotely-accessible as a web service, for example, or it could be locally-installed on a packing station. In this case, communication to the courier’s desktop system is via a print server instance.

Naturally within OrderFlow, couriers can be restricted to be applicable to only certain sites and certain channels and/or organisations (via a script).

A courier can also be configured to have many services (e.g. ‘next day’, ‘3-day’ etc.) and options (e.g. ‘signed for’), to which shipments within OrderFlow can be automatically assigned.

The following screenshot shows an example courier configuration.

Batch Types¶

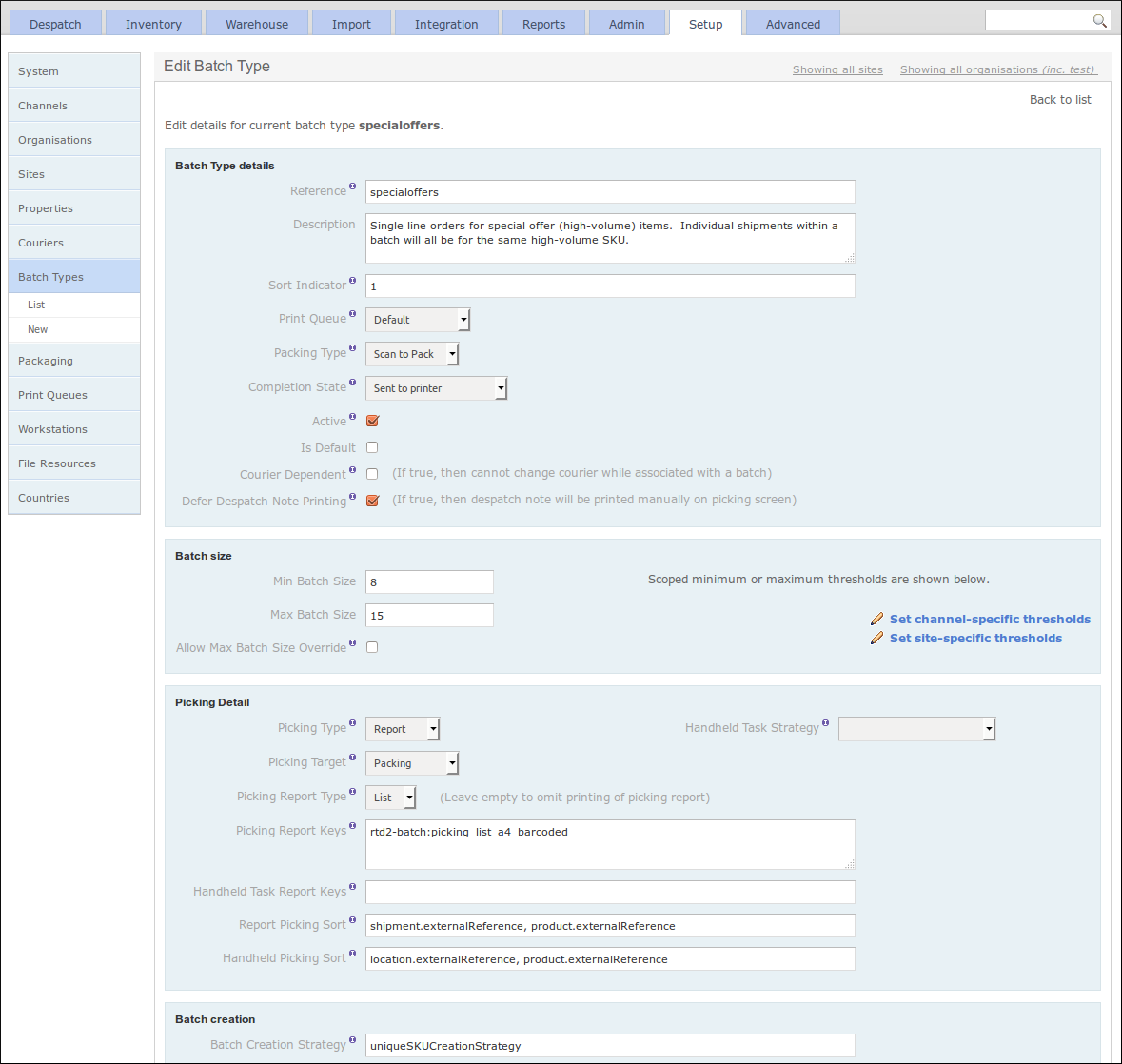

Shipment Batches (or just plain ‘Batches’) are defined in OrderFlow as groupings of shipments that it makes sense to process at the same time. By ‘process’, this typically means pick and pack. For example, high priority shipments might need to be processed separately before other standard priority shipments. Or shipments destined for a particular courier pick-up may need to be processed before other shipments destined for a later pick-up.

Like many things in OrderFlow, the behaviour of batches can be controlled by configuration, allowing warehouse processes to be changed without recourse to developing and deploying new code. This configuration is held in Batch Types, which allow aspects such as minimum and maximum batch size, sorting, picking type, packing type etc. to be set.

More information on batch types can be found in the Warehouse Processes Guide.

The following screenshot shows some of the configurable aspects of a batch type.

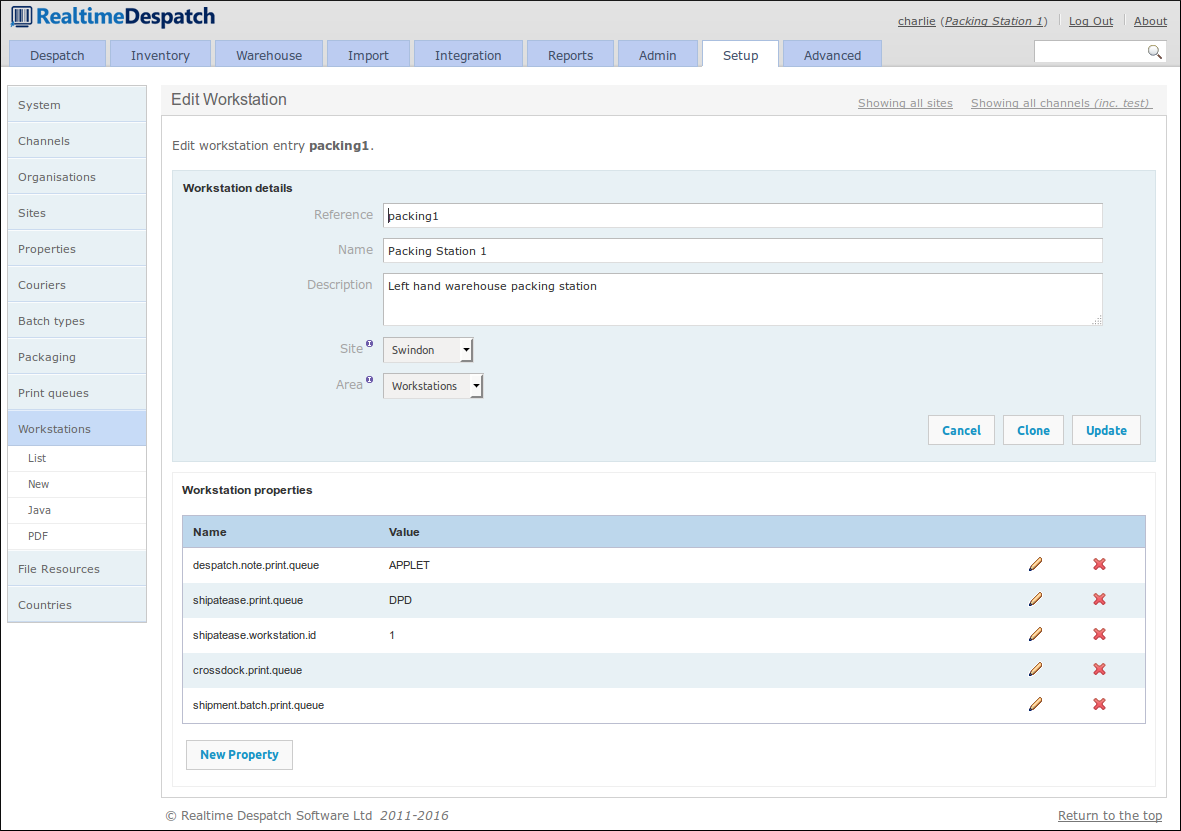

Workstations and Print Queues¶

The set-up of different Workstations in OrderFlow effectively allows it to communicate locally when accessed from a particular workstation. For example, configuring a workstation-specific print queue name allows OrderFlow to route print jobs to that queue, and only the print server on that workstation will be configured to poll that queue. The result is that print jobs will be printed on the printer attached to that workstation.

Print queues are used to route different kinds of ‘printable’ items to the correct place. This is typically a physical printer, but could also be a file system location, for example, when communicating with courier desktop systems. A separate application, called the Print Server, polls print queues to perform the actual printing.

Other Setup Configuration¶

There are other elements available to configure under the ‘Setup’ tab, that have not already been covered.

Packaging Types can be defined, which can be assigned to shipments during the despatch process to record how a shipment was packaged. This is useful for some organisations that want to report on how much packaging they use, to make it easier to order supplies.

It is also possible to define file resources in OrderFlow. These are images, documents or message bundles that can be used in various places in the system. For example, images are used on despatch notes, documents may back product datasheets, and message bundles can assist in producing internationalised paperwork from a single report definition.

Finally, the ‘Setup’ tab is where countries are configured. These are used in despatch operations to determine country-specific properties, usually when communicating with courier systems. For example, one courier may require the ISO 3166-1 alpha-2 (two-letter) code, whereas another may require the ISO 3166-1 numeric code. Both properties can be attached to the country object, making lookup easier.

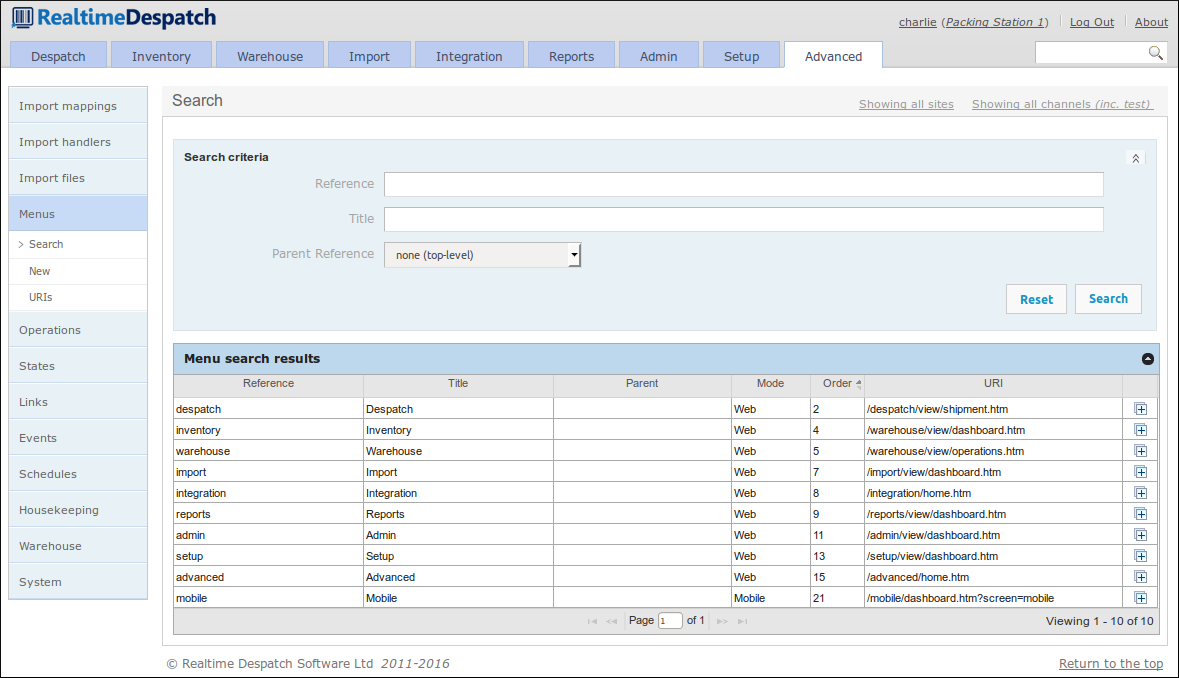

Advanced¶

The Advanced section of OrderFlow is where the more advanced configuration is set. This configuration controls the fundamental behaviour of the application, and as such should only be changed by experienced system administrators or those with special knowledge or training in this area.

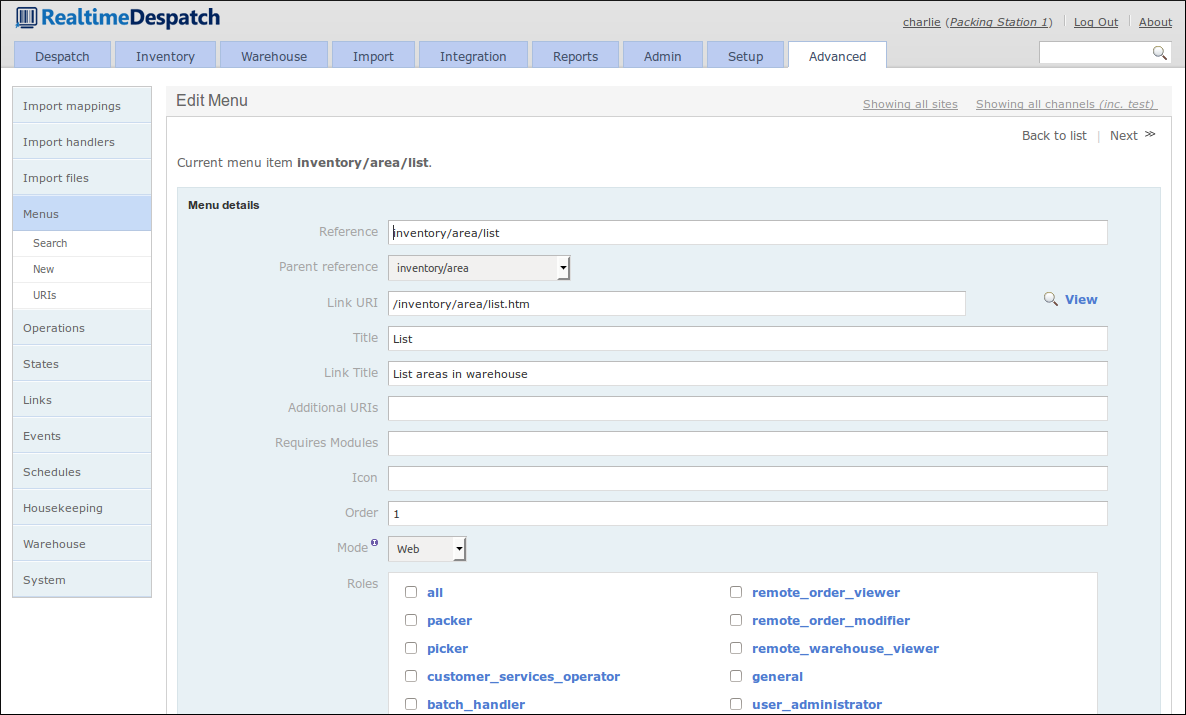

The advanced configuration covers the behaviour of data imports, which define how various data is imported onto OrderFlow. It defines the various states that entities on the system can pass through, and the operations that move them between states.

It includes configuration for events and their listeners, schedules and their handlers, and also when certain links are displayable. In addition to some advanced warehouse configuration, there is the possibility to change certain system features, such as menus, or even which software modules are loaded.

This section provides an overview of the advanced features of OrderFlow in more detail.

Import Configuration¶

Data can be imported into OrderFlow using a variety of mechanisms. The most simple is via OrderFlow’s API, an XML over HTTP interface.

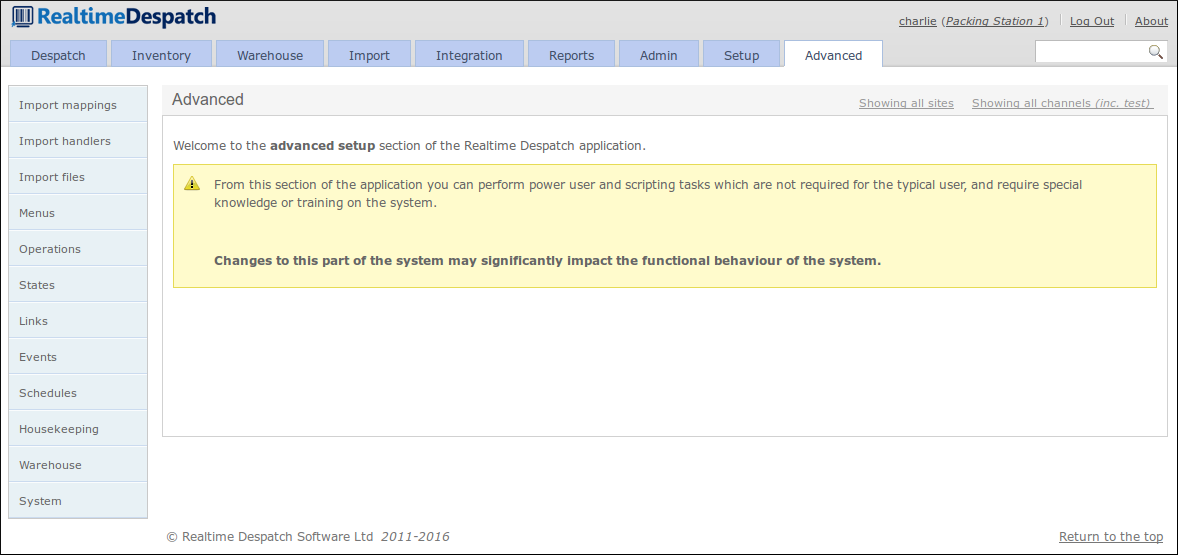

Alternatively, data files can be placed on file system locations accessible to OrderFlow, which in this case would periodically poll those directories and read the files. The configuration to define these locations, and how the files should be read and processed, is held under the Import Files sub-menu.

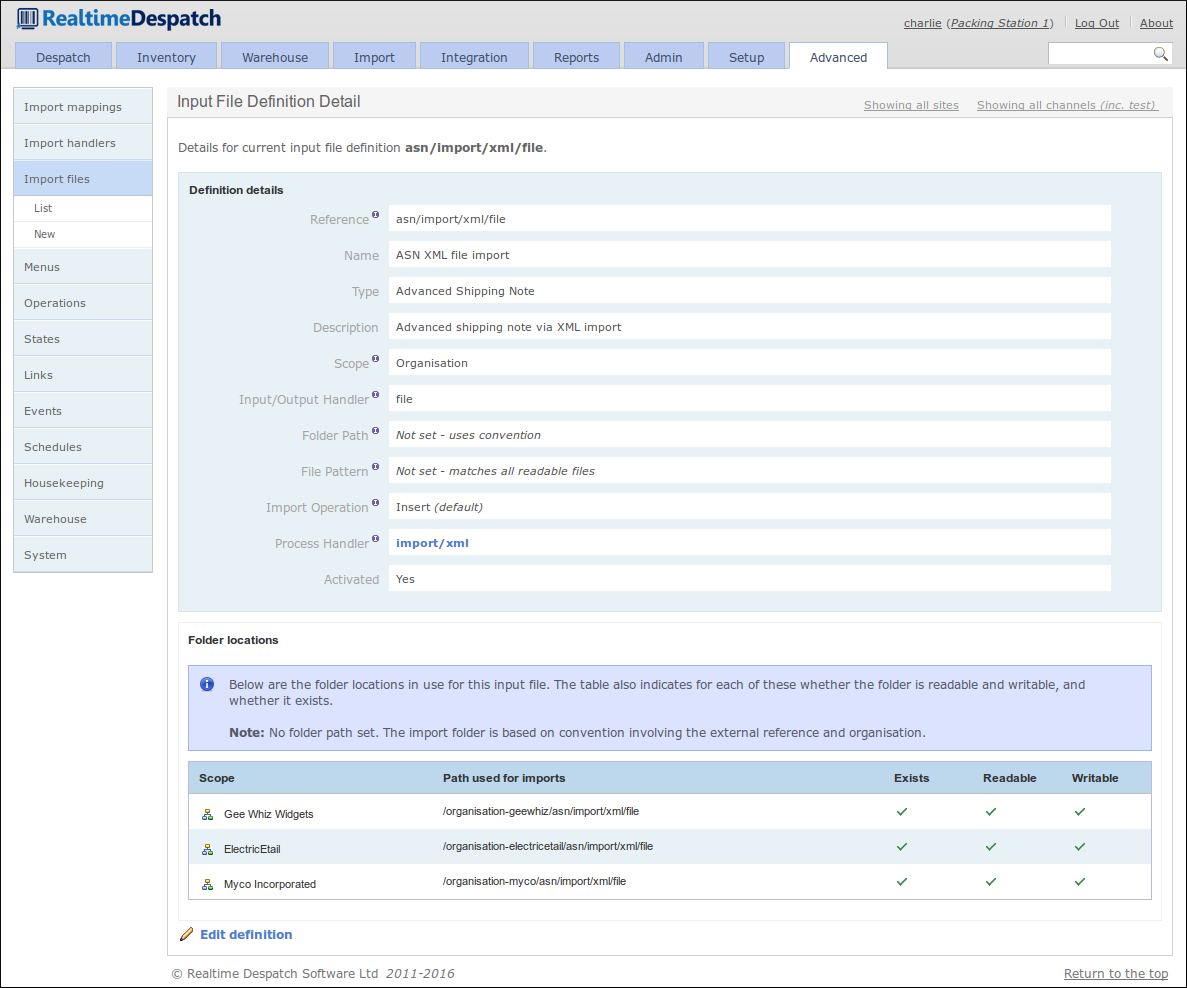

To define how a file should be processed, the Import File definitions reference Import Handler definitions. These reference specific functions within OrderFlow that define how the data is processed. They also define the expected object type, the format and any encoding that the data has. Additionally, a data transformation can be applied at this stage, to potentially label the imported data fields to expected values.

Finally, to ensure that imported data can be further transformed through configuration, each imported data object is passed through an Import Mapping. This is the case for data imported from file, or via the API.

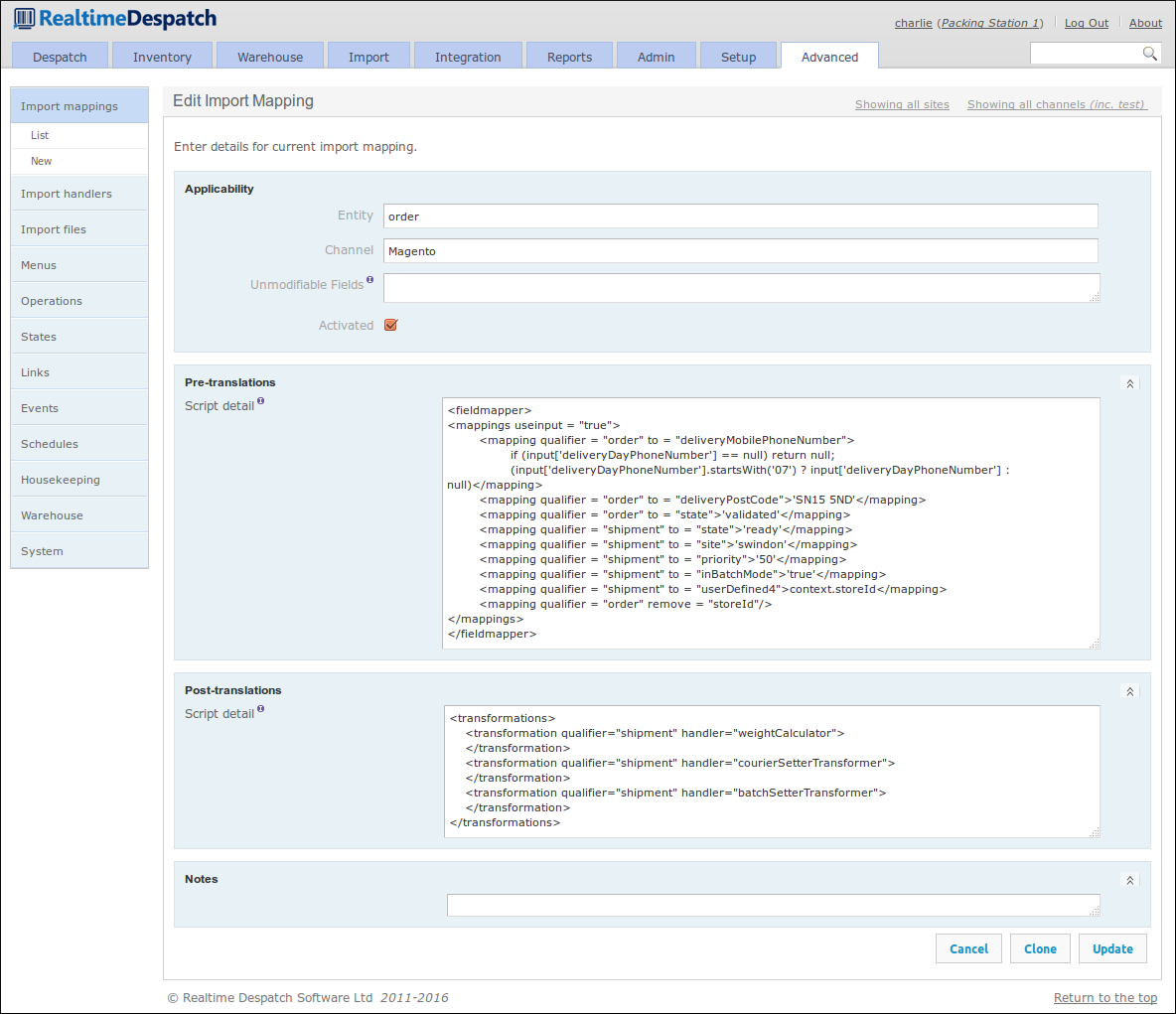

Import Mappings can be defined for an object and scoped by channel, so that data received from different sales channels can be treated differently. These mappings contain a pre-translation script and a post-translation script - the difference between these is expanded upon in the Importing Guide. Each script can contain multiple mappings that utilise the Groovy scripting language, to apply logic and potentially transform individual data fields in the imported data. Additionally, the post-translation script can utilise functionality in OrderFlow to apply more logic or further change the imported entities.

An example of what import mappings can do is the following order import mapping configured for the ‘Magento’ sales channel. The pre-translation sets a few attributes of the order and shipment, and applies some logic when setting the mobile phone number. The post-translation invokes pre-defined functionality that sets the weight, courier and batch type of the shipment.

States and Operations¶

One of OrderFlow’s more powerful features is its configurable state transition model. This means that the processing of the main transactional entities (such as orders, shipments and order lines) can be changed ‘on the fly’ in a deployed system, just by changing the configuration.

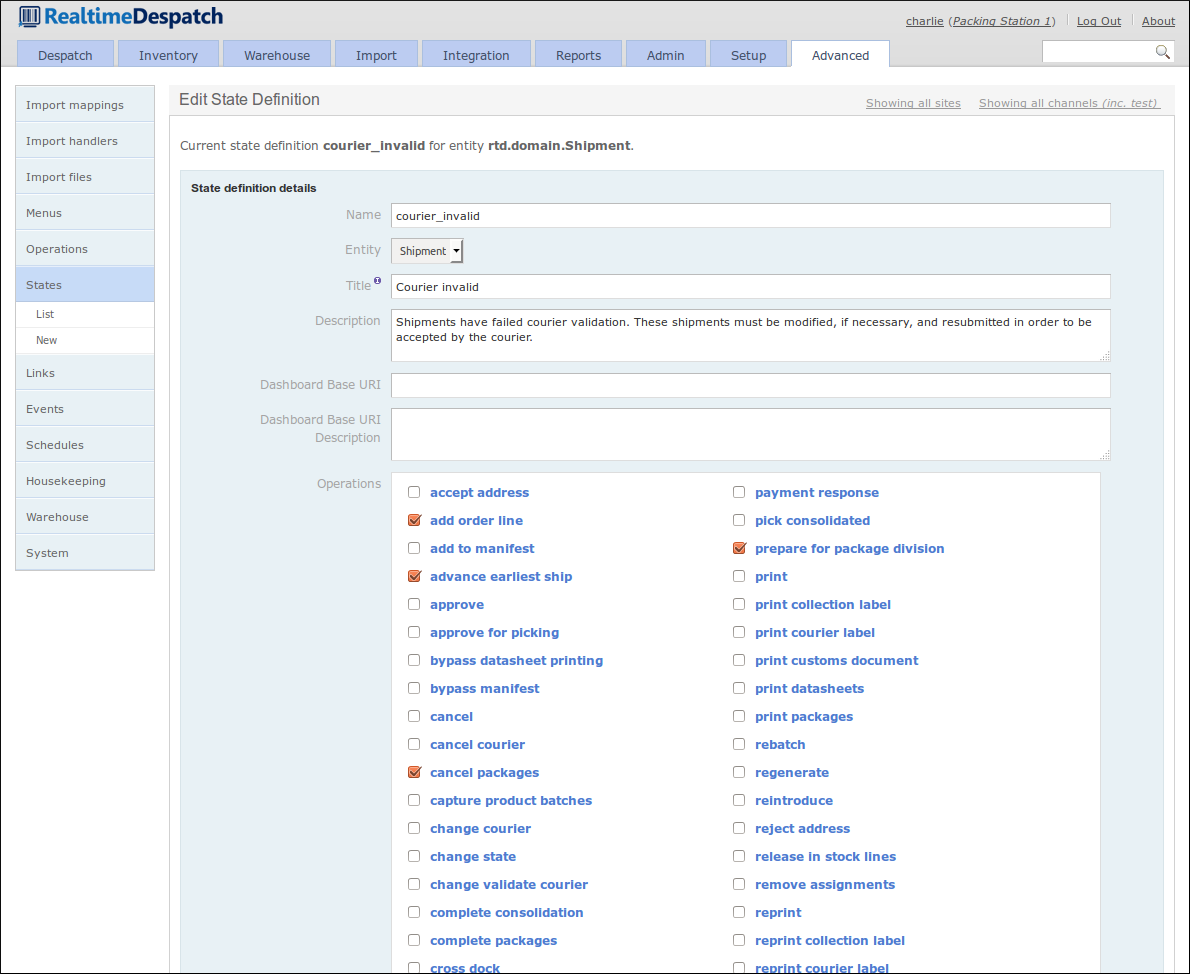

States are defined for orders, shipments and order lines. Each state definition references the operations that are available to an object while in that state. For example, a shipment in the pending approval state only has the ‘approve’ and ‘defer approval’ operations available to it.

The following screenshot shows part of the ‘Courier invalid’ state definition for the shipment object.

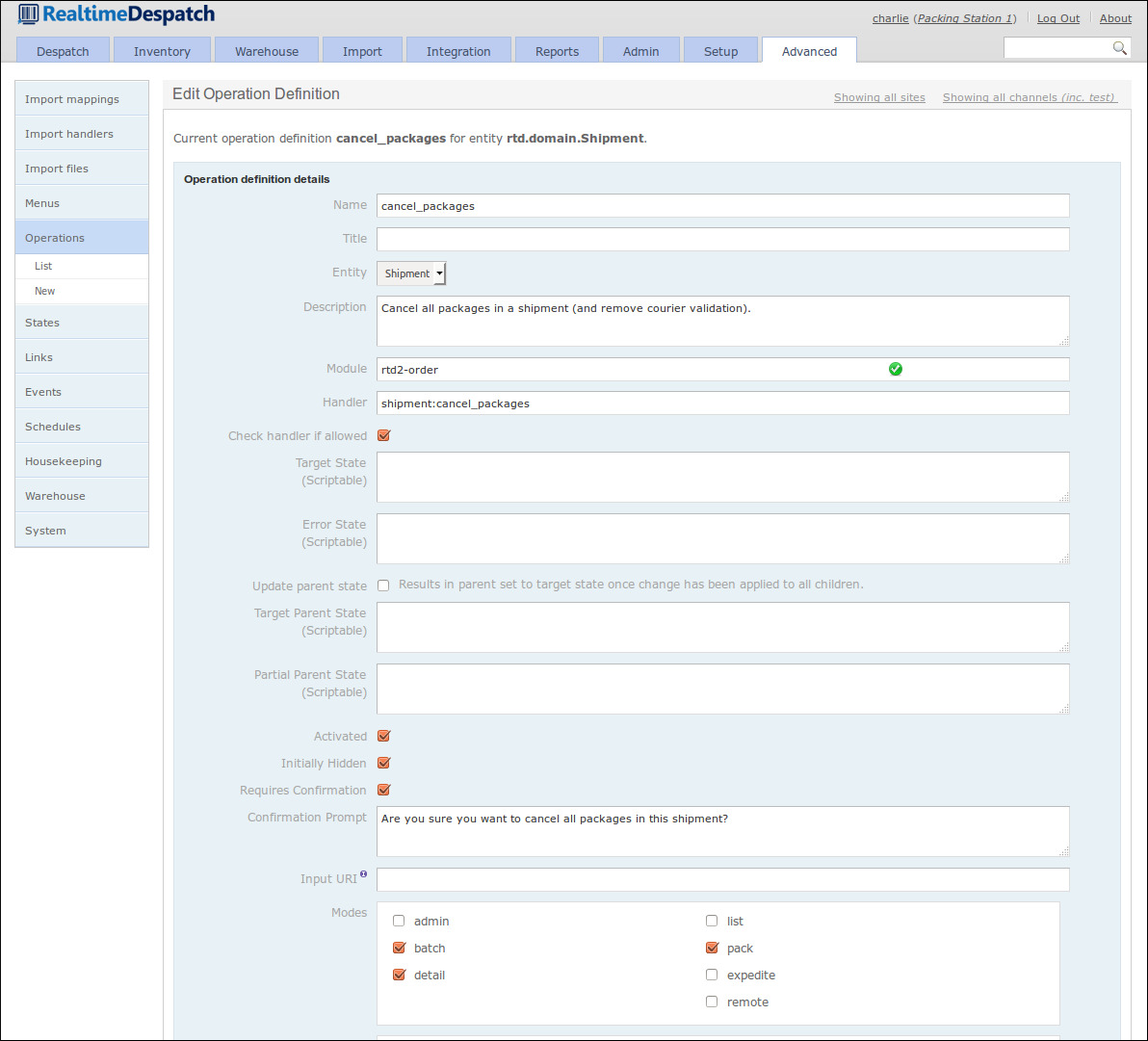

Each operation referenced by a state definition defines what functionality is invoked when that operation is invoked. It does this by referencing known functionality via the handler attribute.

When an operation is invoked, the object is typically assigned a new state. The exact state to be assigned is based on fall-back logic that uses the invoked functionality and the operation definition, which can contain scripted logic. If an object has a parent object (e.g. a shipment’s parent object is an order), then the parent object’s state can be defined to be automatically updated if all its children have reached the operation’s target state.

An operation definition can also be assigned modes, which determine where in the desktop user interface the operation will be made available. For example, operations with the mode ‘detail’ are presented to the user as buttons on the object detail pages.

Similarly to menus, operations can be restricted to certain roles, so that more advanced operations are not invokable by all users.

Links¶

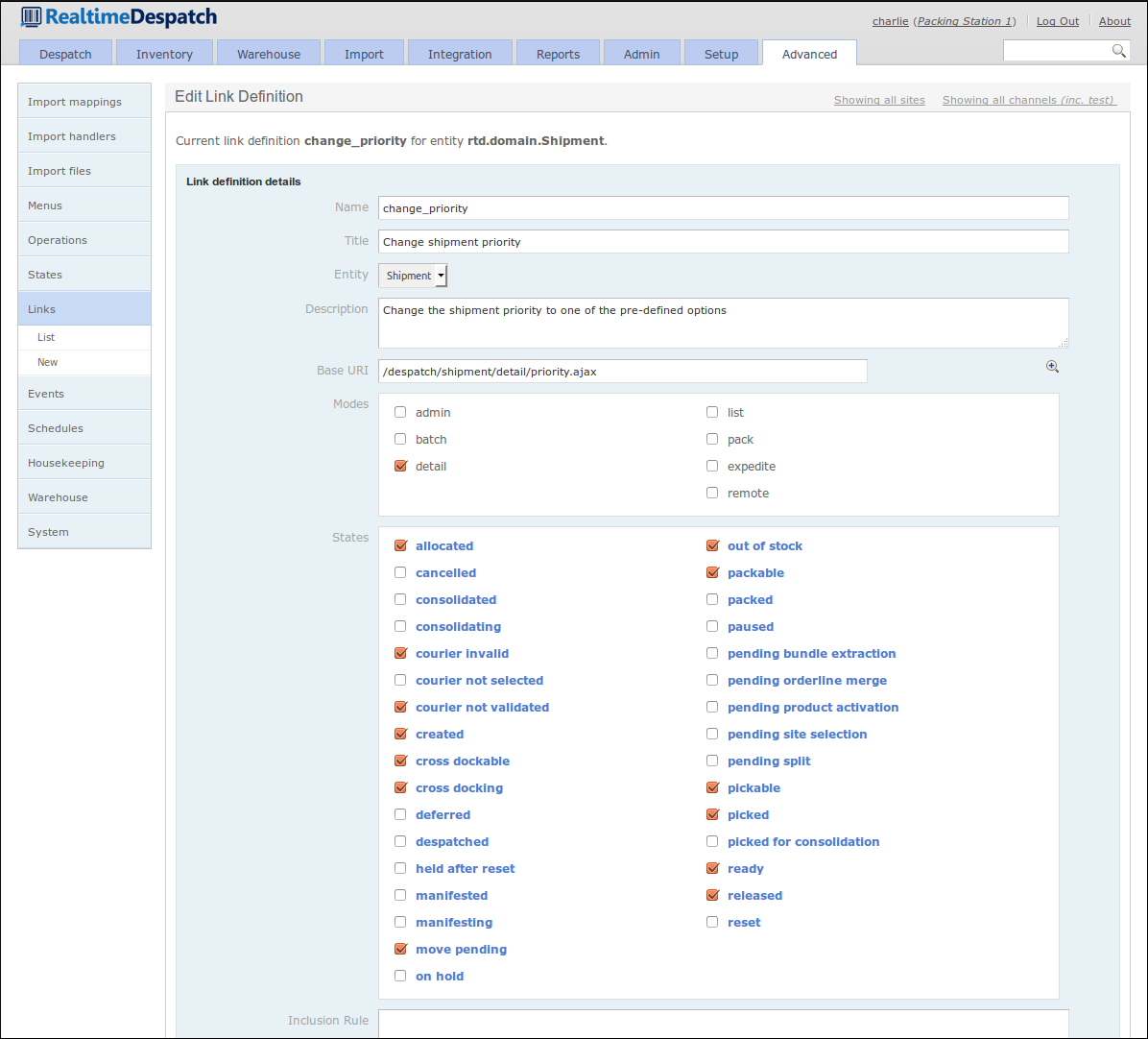

A Link definition in OrderFlow is a configurable object that gives control over which links (i.e. hyperlinks or icon links) are displayed on the desktop user interface. Each link defines a URI to where it leads, if invoked.

Each link definition applies to an object (e.g. shipment, order etc.), and defines the states in which the link should be displayed. Similarly to the operation definition, a link definition can also be assigned modes, which determine where in the desktop user interface the link will be made available.

The following screenshot shows a ‘Change shipment priority’ link definition for the shipment object.

Events¶

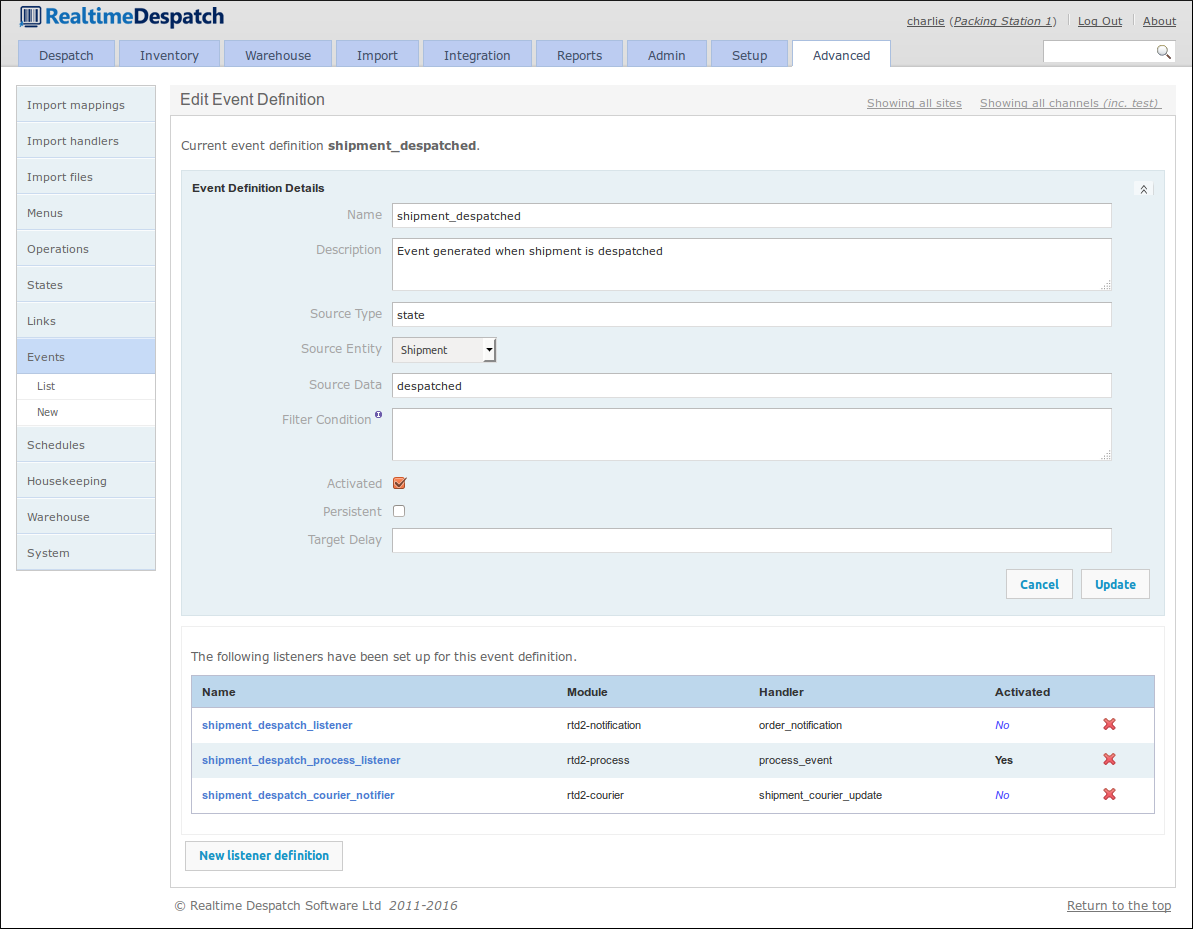

The Events section of OrderFlow is another area that adds to the power and flexibity of the system. As various changes to entities occur, OrderFlow can fire events, which contain details of what has just happened to the object concerned.

Event definitions and their listeners define what functionality should be invoked when certain events fire. For example, if a shipment has just been marked as despatched, a ‘shipment despatched’ event is fired, and the event definition for this event invokes each of the configured active listeners for this event. One of these listeners may ultimately notify an external system (e.g. a shopping cart) of this event.

An event definition can support a comma-separated list of sourceData values. This allows the associated event listeners to be fired for each of the values supplied. For example, if you want to fire the same event listener when a shipment is in the state packed and despatched, the value for sourceData would be packed,despatched.

The configurability of event definitions and their listeners allows system behaviour to be changed without needing to develop and deploy new code.

Schedules¶

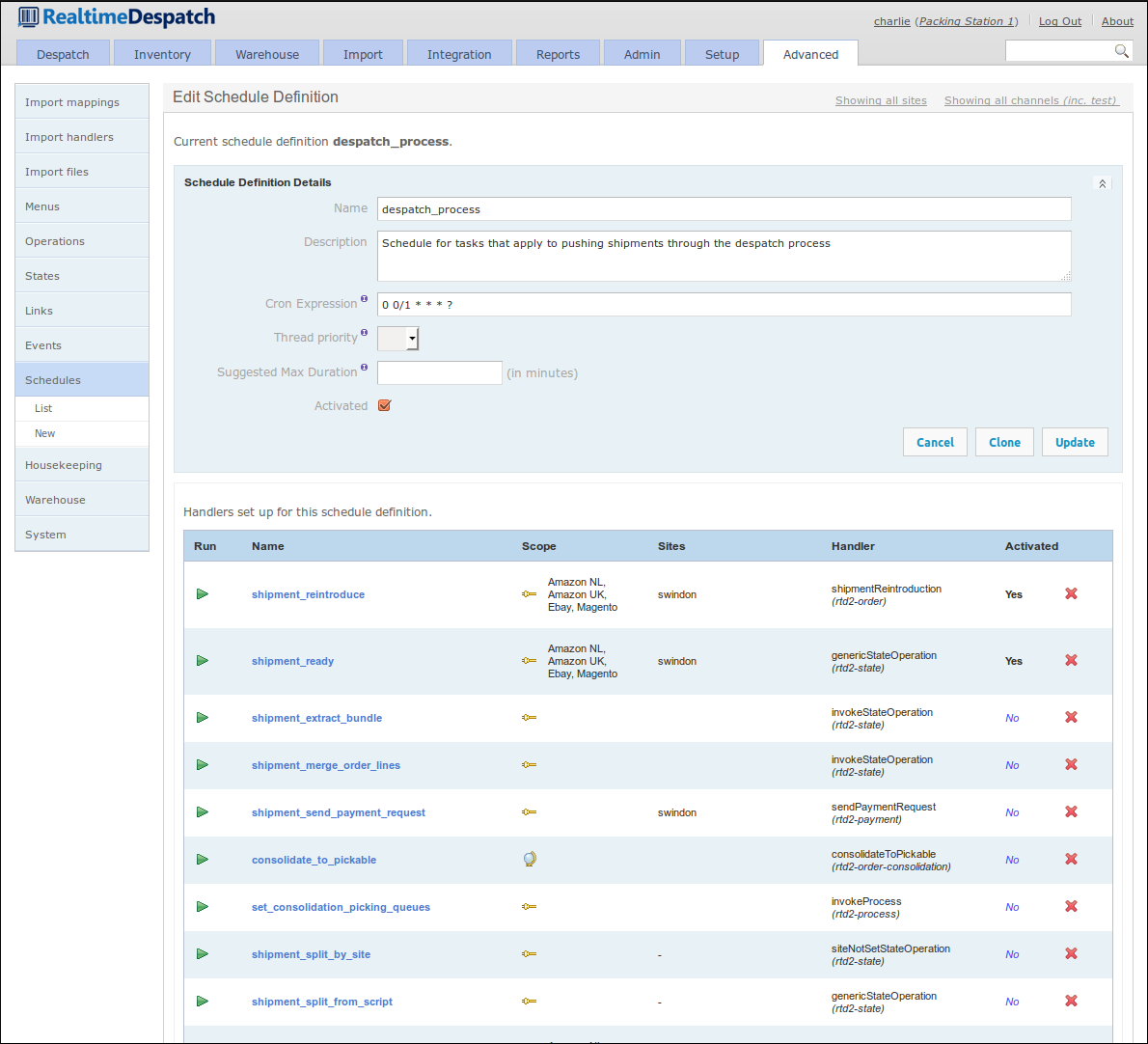

In order that OrderFlow can present a real-time picture of warehouse activity in a performant manner, some of its processing needs to be done in the background, i.e. not in response to user or external system action. For example, it would soon fall over if it tried to calculate the overall picture of inventory levels following every single stock change.

It is for this reason (amongst others) that OrderFlow has a scheduling capability, which allows for processes to run at predefined times throughout each day. This capability is configured in Schedule Definitions, which are each associated with a cron expression, to define when the schedule will fire.

Schedule Handlers can be attached to schedule definitions - these reference known functionality in OrderFlow, which will be executed in order when the schedule fires. Each handler can be restricted to certain sites, organisations and channels, if required, and each can be configured to pass certain parameters to the invoked functionality. Additionally, each handler invocation can be configured to repeat for a defined duration or count.

Schedule handler invocations can be recorded in the database for later inspection. This provides useful evidence of system behaviour, if necessary.

The following screenshot shows a schedule definition, which fires every minute and invokes the active handlers.

Warehouse Configuration¶

The advanced Warehouse section allows the configuration of various warehouse-related aspects of OrderFlow. This section gives an overview of some of these aspects.

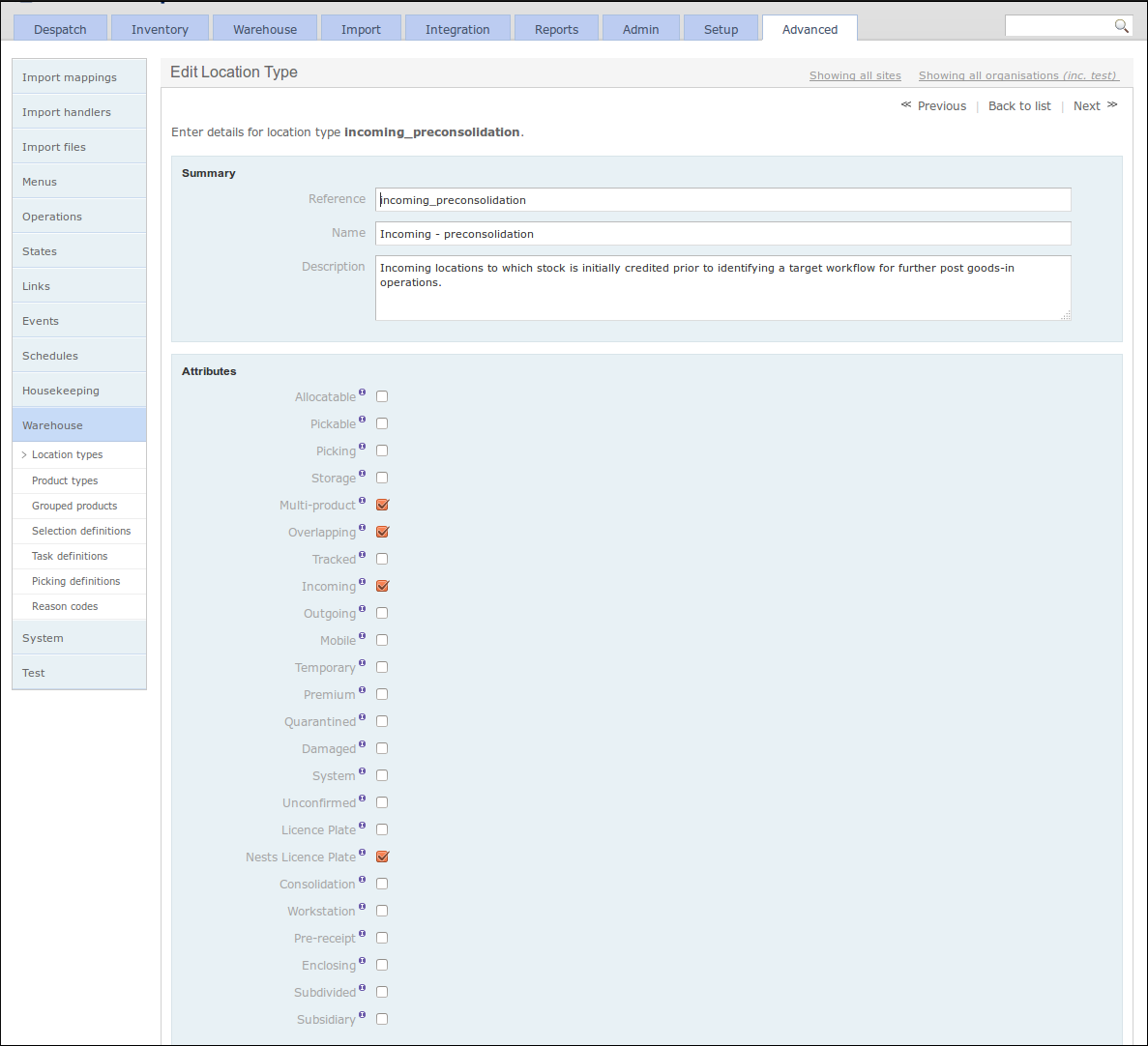

Location and Product Types¶

Location Types and Product Types can be defined here; these enable a high degree of flexibility for organisations to set up the warehouse in the exact way that they want to, as these types essentially add a layer of abstraction in front of the attributes that a location or product may have. This allows subtly-different types to be defined, which will influence warehouse behaviour in respect to location and product usage.

The following screenshot shows the definition of a ‘Incoming - preconsolidation’ location type.

Location Selection Definitions¶

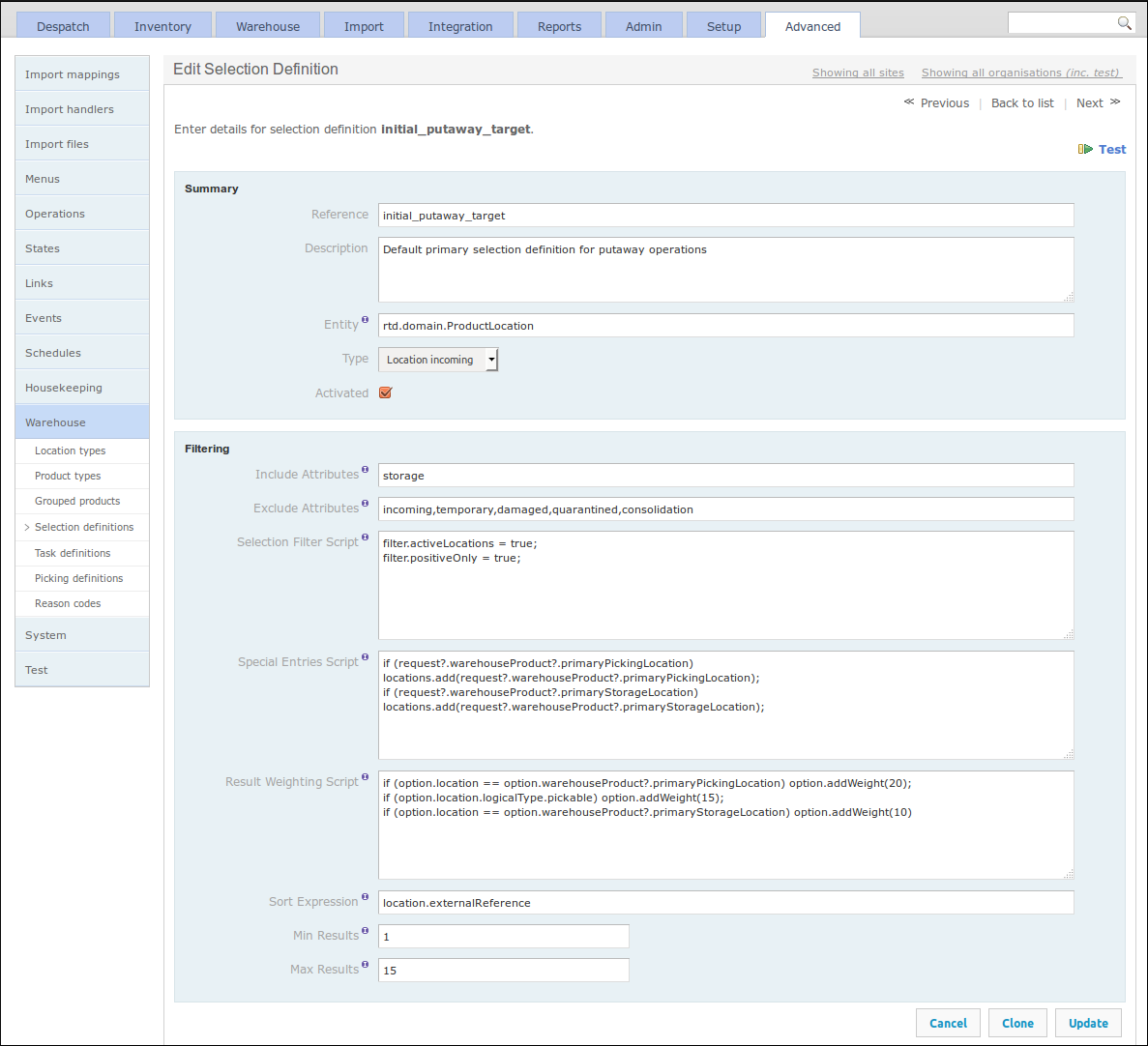

Location Selection Definitions (or just ‘Selection Definitions’) expose a mechanism to select warehouse locations for certain tasks, in a configurable manner. These selection definitions can then be used in various places in OrderFlow, and as such can be changed or adjusted if necessary, changing the behaviour of the application without the need for any code to be changed.

A selection definition can filter locations by their attributes, either by inclusion or exclusion. It also has a filter script, which allows further flexible configuration to be applied via hooks into the search objects. Sorting, weighting and results sizes can be configured to give fine-grained control over the location selection results.

The following screenshot shows an example of a location selection definition.

Task Definitions¶

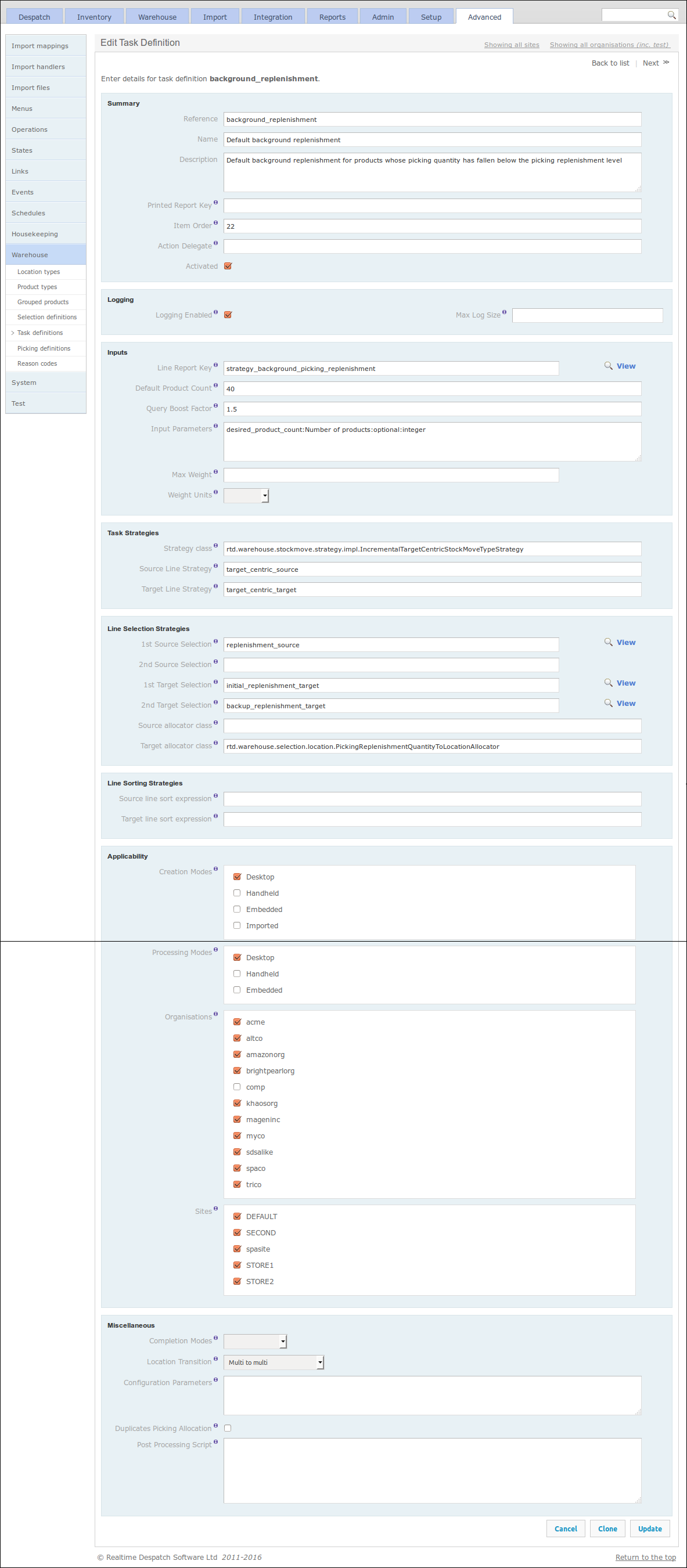

Stock move tasks, as introduced in the Stock Move Tasks section, are instructions to move stock from one location to another. To determine how much of which stock to move from which location to which other location, OrderFlow uses the configurable Task Definition object.

Such an object facilitates the automatic creation of the right stock move tasks at the right time. The task definition defines which built-in strategy will be used when creating tasks of this type. This strategy may utilise a report to determine which lines to include in the task, or it may use a configured line selection strategy, which references a Location Selection Definition (as detailed in the previous section).

Sorting, scoping and creation and processing modes can also be defined in a task definition, as well as other options. See the Warehouse Processes Guide for more information.

The following screenshot shows a task definition for the ‘Default background replenishment’ task.

Picking Definitions¶

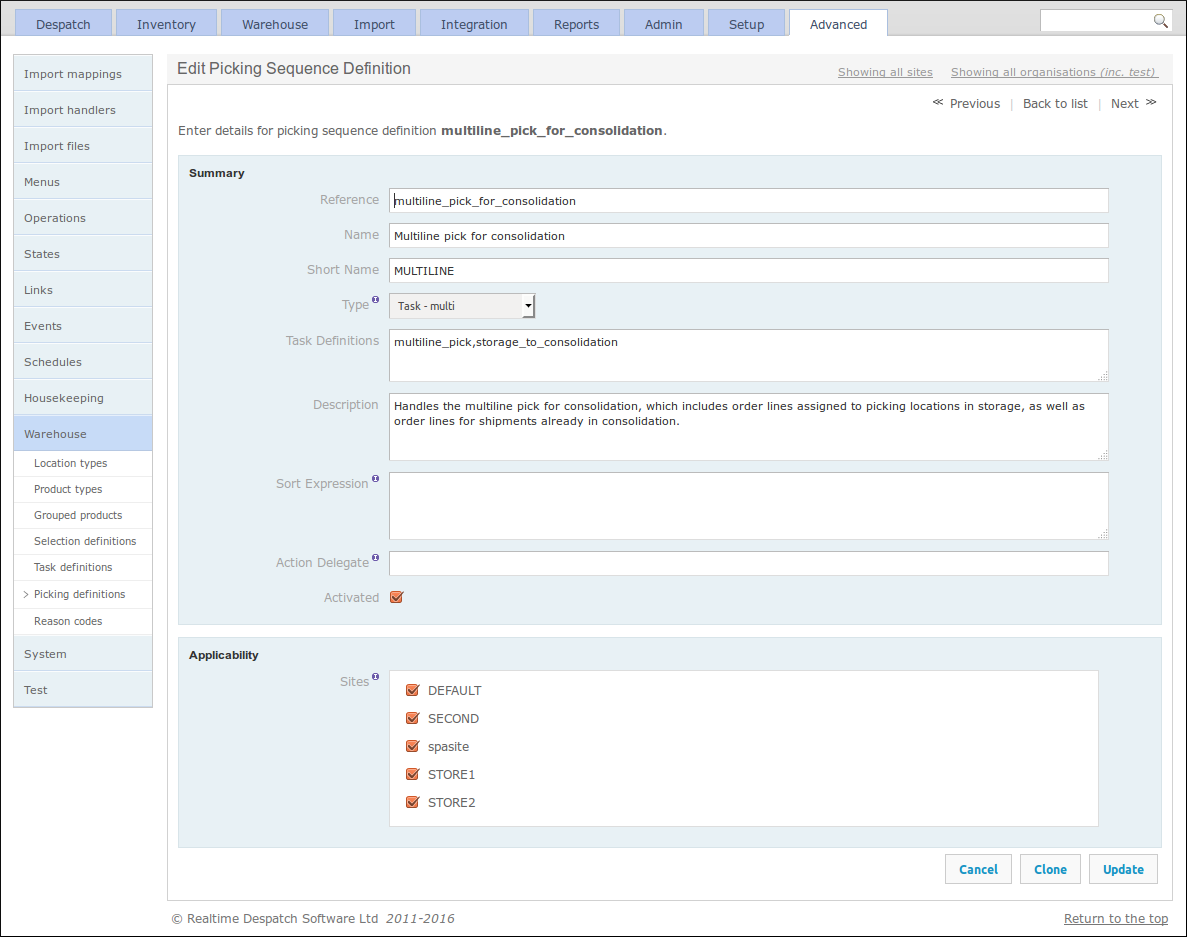

OrderFlow’s Picking Sequence Definitions can also be configured under the Warehouse sub-menu. Picking Sequences are a mechanism to combine different task types into the same picking operation, to improve the efficiency of picking operations in the warehouse.

A picking sequence definition can be assigned a sort expression, and also hook into specific logic to be invoked when the picking sequence is complete. More details can be found in the Warehouse Processes Guide.

The following screenshot shows an example ‘Multiline pick for consolidation’ picking sequence definition, which combines the task definitions ‘multiline pick’ and ‘storage to consolidation’.

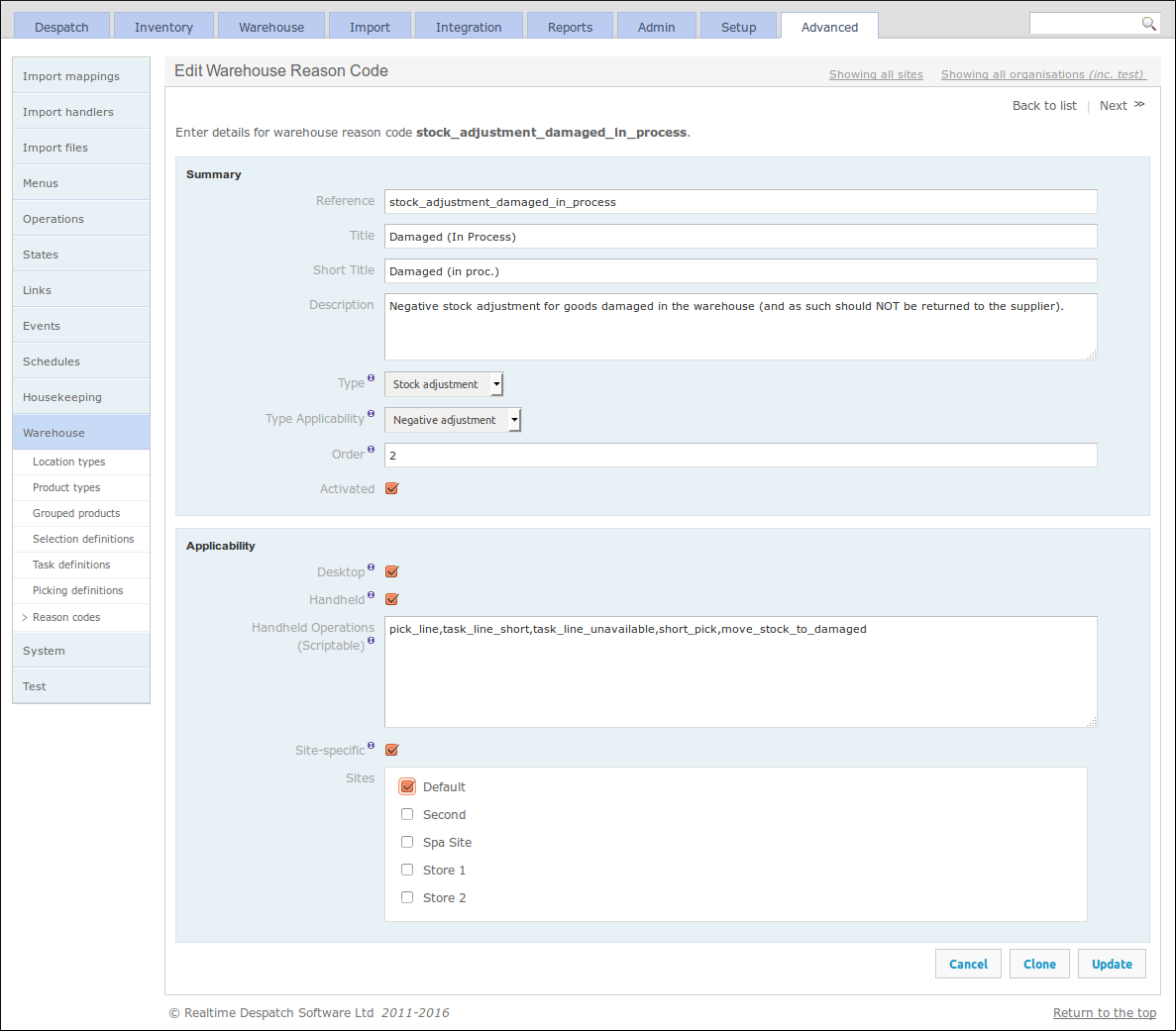

Reason Codes¶

Finally, Warehouse Reason Codes can be configured under the Warehouse sub-menu. These provide a flexible way of controlling certain aspects of the warehouse process, such as what options are presented to users when making stock adjustments or setting return reasons. As the following screenshot shows, their applicability can be restricted by site and also restricted to certain handheld operations.

System, Housekeeping and Test¶

The Housekeeping area of OrderFlow contains configuration for dealing with purging and archiving of data from the system. Purging data allows non-critical event data to be permanently deleted from the system, whereas archiving allows business transactional data to be removed from the system, potentially to the file system or another database, for possible later business analysis.

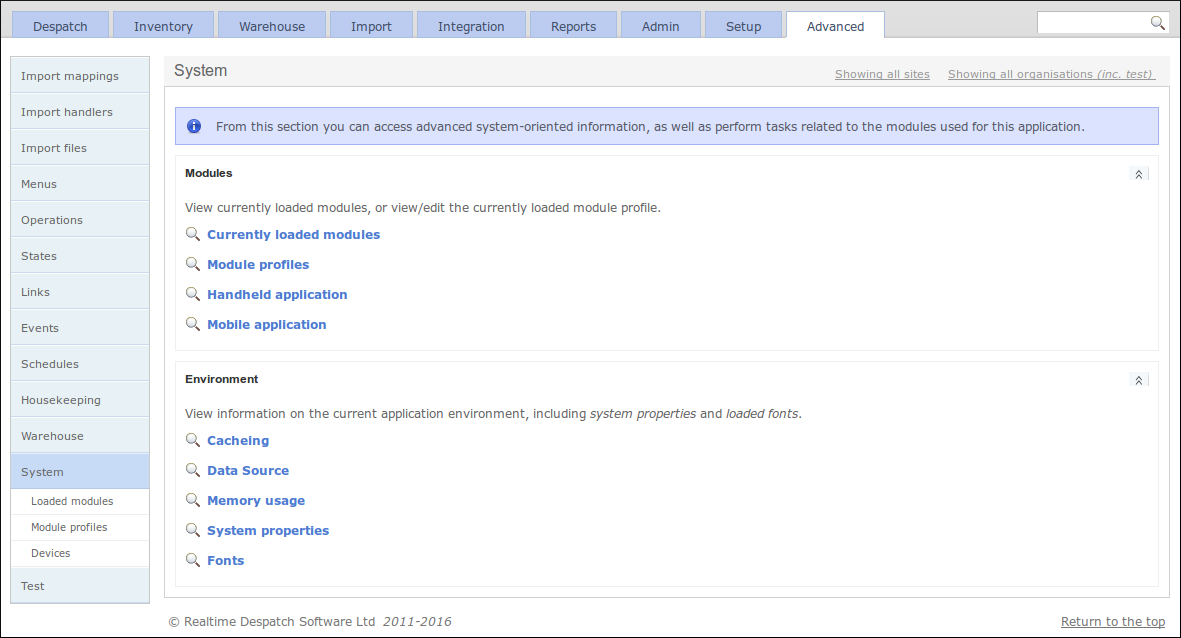

The System sub-menu includes the configuration for which software modules are loaded on the currently-running instance. OrderFlow is a modular application, which means that, on top of a set of core modules, there are many optional modules that deliver specific functionality. The optional modules are enabled according to what functionality a customer requires. It is possible to change the module configuration, but only those modules that have been commissioned by Realtime Despatch can be enabled.

Additionally, this section includes the display of various system environment properties, memory usage and loaded fonts. It also provides some control over resetting the system cache and data source.

Finally, the Test sub-menu supports the testing of OrderFlow itself, by allowing certain data sets to be loaded, if available on the underlying file system. This feature is only useful in a development environment, i.e. it is not to be used on deployed instances of OrderFlow.

Further Reading¶

OrderFlow Magento Integration Guide

Printing and Workstation Setup Guide

OrderFlow Report Writers’ Guide

Warehouse Processes Guide (to be published)